Big Data is Thriving. Is RDBMS Dead?

MapReduce vs. RDBMS

People think that MR is a new, transformative technology. New? No. Transformative? Yes.

Although it might seem that MapReduce (MR) and parallel DBMSs are different, it is actually possible to write almost any parallel-processing task as either a set of database queries or a set of MR jobs.

When you look at the semantics of the MR model, you’ll find that its approach to filtering and transforming individual data items (tuples in tables) can be executed by a modern parallel DBMS using SQL. Even though Map operations are not always easily expressed in SQL, many DBMSs support user-defined functions (UDFs), which provide the equivalent functionality of a Map operation. The Reduce step in MR is equivalent to a GROUP BY operation in SQL.
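To make the Map-as-UDF and Reduce-as-GROUP-BY equivalence concrete, here is a minimal Python sketch that computes revenue per domain twice: once as a hand-rolled map/shuffle/reduce, and once in SQLite with the same Python function registered as a SQL UDF. The sample records and the `domain` helper are hypothetical, and SQLite stands in for a parallel DBMS only to show the query shape, not its performance.

```python
import sqlite3
from collections import defaultdict

# Hypothetical web-log records: (ip, url, revenue).
LOGS = [
    ("10.0.0.1", "http://a.com/x", 2.0),
    ("10.0.0.2", "http://b.com/y", 5.0),
    ("10.0.0.1", "http://b.com/z", 1.5),
]

def domain(url):
    """Per-record transformation (the 'Map' logic): extract the host."""
    return url.split("/")[2]

def mapreduce_revenue_by_domain(records):
    # Map: emit one (key, value) pair per input record.
    pairs = [(domain(url), revenue) for _ip, url, revenue in records]
    # Shuffle: group all values by key.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    # Reduce: aggregate the values of each key.
    return {key: sum(values) for key, values in groups.items()}

def sql_revenue_by_domain(records):
    conn = sqlite3.connect(":memory:")
    # Register the same Python function as a SQL UDF (the Map step).
    conn.create_function("domain", 1, domain)
    conn.execute("CREATE TABLE logs (ip TEXT, url TEXT, revenue REAL)")
    conn.executemany("INSERT INTO logs VALUES (?, ?, ?)", records)
    # GROUP BY plays the role of the shuffle + Reduce step.
    rows = conn.execute(
        "SELECT domain(url) AS d, SUM(revenue) FROM logs GROUP BY d"
    ).fetchall()
    conn.close()
    return dict(rows)
```

Both functions should return the same mapping (`a.com` → 2.0, `b.com` → 6.5); only the programming model differs.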

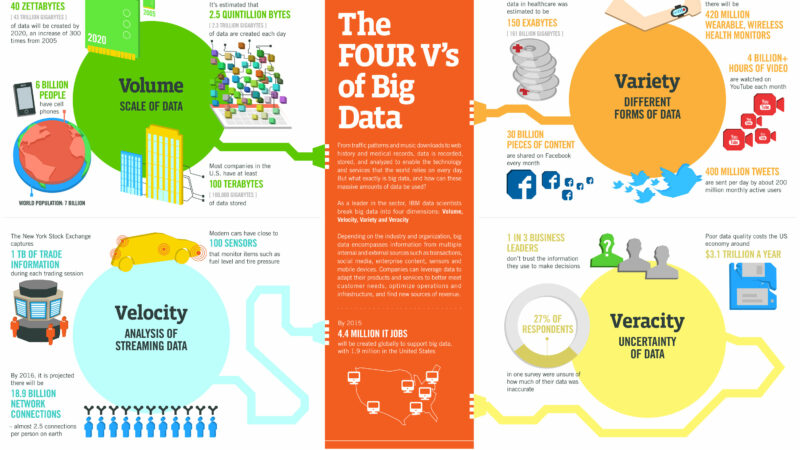

So, if we’ve been able to perform MR-like operations with RDBMSs all along, what’s the big deal? Well, it has something to do with the fact that each new generation of technologists likes to do things differently. Things evolve. Just look at the difference between today’s data scientist and yesterday’s data analyst, and you begin to understand why Hadoop / MapReduce has become transformative. Here are a few thoughts…

Big Data = Big Simplicity

One of the most attractive qualities of the MR programming model (and perhaps its key attraction for the new generation of data scientists and application programmers) is its simplicity: an MR program consists of only two functions, Map and Reduce, written to process key/value pairs. As a result, the model is easy to use, even for programmers without experience in parallel and distributed systems.
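As an illustration of how little the programmer has to write, here is a minimal word-count sketch in Python. The user supplies only `map_fn` and `reduce_fn`; `run_job` is a hypothetical single-machine stand-in for the framework (it is not Hadoop’s API) that runs the map calls on a thread pool and performs the group-by-key shuffle, the kind of plumbing a real MR system hides along with fault tolerance and data locality.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# --- The only two functions the programmer writes ---
def map_fn(_doc_id, text):
    """Emit (word, 1) for every word in one input record."""
    for word in text.lower().split():
        yield word, 1

def reduce_fn(word, counts):
    """Combine all values emitted for one key."""
    return word, sum(counts)

# --- Hypothetical toy "framework" that hides the plumbing ---
def run_job(records, mapper, reducer, workers=4):
    """Run map calls in parallel threads, shuffle (group values by key),
    then run one reduce call per key."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = pool.map(lambda rec: list(mapper(*rec)), records)
        groups = defaultdict(list)
        for pairs in mapped:
            for key, value in pairs:
                groups[key].append(value)
        return dict(pool.map(lambda item: reducer(*item), groups.items()))
```

For example, `run_job([(1, "big data big"), (2, "Data")], map_fn, reduce_fn)` returns `{"big": 2, "data": 2}`.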

It also hides the details of parallelization, fault tolerance, locality optimization, and load balancing. Those experienced with RDBMSs and SQL will naturally say that writing SQL code is easier than writing MR code. However, we should probably ask the next-gen data scientists and application developers which is easier. Let’s ask the top 7 data scientists.

Unlike a DBMS, MR systems do not require users to define a schema for their data. Thus, MR-style systems easily store and process what is known as “semi-structured” data. Such data can often be made to look like key-value pairs, where the number of attributes present in any given “record” varies. Web traffic logs derived from disparate sources are a typical example. To handle this in an RDBMS, you would have to create a very wide table with many attributes to accommodate multiple record types (using NULLs for the values that are not present in a given record). This is where columnar databases come into play: they read only the attributes relevant to a query and automatically suppress the NULL values. However, if you had your choice, you would choose NO SCHEMA. Of course, there are always tradeoffs.
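The wide-table problem is easy to see in a small Python sketch. The records below are hypothetical; `to_wide_table` mimics a row-oriented table whose columns are the union of all attributes (with `None` standing in for SQL NULL), while `to_columns` shows one simple way a column-oriented layout can avoid storing NULLs at all by keeping only the values that are present. Real columnar engines use more sophisticated encodings, so treat this as a conceptual sketch only.

```python
# Hypothetical semi-structured log records: each "record" carries a
# different set of attributes, so there is no single fixed schema.
RECORDS = [
    {"ip": "10.0.0.1", "url": "/home"},
    {"ip": "10.0.0.2", "url": "/buy", "revenue": 9.99},
    {"ip": "10.0.0.3", "user_agent": "curl/8.0", "referrer": "ads"},
]

def to_wide_table(records):
    """Row-oriented approach: one wide table whose columns are the union
    of all attributes, with None standing in for SQL NULL."""
    columns = sorted({key for rec in records for key in rec})
    rows = [tuple(rec.get(col) for col in columns) for rec in records]
    return columns, rows

def to_columns(records):
    """Column-oriented approach: store each attribute separately, keeping
    only the (row_id, value) pairs that are actually present."""
    store = {}
    for row_id, rec in enumerate(records):
        for key, value in rec.items():
            store.setdefault(key, []).append((row_id, value))
    return store
```

With only three records, the wide table already has 5 columns and 7 NULL cells out of 15, while the columnar store for `revenue` holds a single entry, `[(1, 9.99)]`.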

In addition, MR implementations provide the best IT user experience. It’s not complicated to install an MR system and get it up and running. By contrast, a high-end RDBMS (even the IT- and DBA-friendly Teradata) requires more installation and configuration effort than MR, although some may argue that a Hadoop cluster also needs tuning to maximize performance. Configuration and tuning aside, once an RDBMS is up and running properly, programmers must still write a schema for their data and then load the data set into the system. With MR, programmers load their data by simply copying it into the Hadoop Distributed File System (HDFS).
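The difference between schema-on-write and schema-on-read can be sketched in a few lines of Python. Here SQLite plays the RDBMS, and a local temporary directory is an assumed stand-in for HDFS (on a real cluster the copy step would be something like `hdfs dfs -put`). The file name and log layout are hypothetical.

```python
import os
import sqlite3
import tempfile

# Hypothetical raw log: "ip url revenue" per line.
RAW_LOG = "10.0.0.1 /home 0\n10.0.0.2 /buy 9.99\n"

def load_into_rdbms(raw):
    """Schema-on-write: declare a schema first, then parse and load rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE visits (ip TEXT, url TEXT, revenue REAL)")
    rows = [line.split() for line in raw.splitlines()]
    conn.executemany("INSERT INTO visits VALUES (?, ?, ?)", rows)
    return conn

def load_into_file_system(raw, directory):
    """Schema-on-read: 'loading' is just copying bytes into the file
    system (a local directory standing in for HDFS here)."""
    path = os.path.join(directory, "visits.log")
    with open(path, "w") as f:
        f.write(raw)
    return path

def total_revenue_from_file(path):
    # Structure is imposed only when a job reads the data.
    with open(path) as f:
        return sum(float(line.split()[2]) for line in f if line.strip())
```

Both paths yield the same total revenue; the difference is when the structure has to be declared.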

Where Does MR Shine?

Even though parallel DBMSs are able to execute the same semantic workload as MR, there should be several application use-cases where MR is consistently the better choice.

We routinely hear that Hadoop / MapReduce is being deployed in “data pipeline” use-cases (aka ETL). This makes sense because the canonical use of MR can be characterized by five operations:

- Read information from many different sources (structured and unstructured)

- Parse and clean the data

- Perform complex transformations (such as “sessionalization”)

- Decide what attribute data to store

- Load the information into a data store (file system, RDBMS, NoSQL data store, graph DB, etc.)

This is analogous to the extract, transform, and load phases in ETL systems: MR takes raw data and creates useful information that can be consumed by another storage system.
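The five pipeline operations can be sketched end to end in plain Python. Everything here is an assumption for illustration: the two input formats, the field layout, the 30-minute inactivity timeout used to cut sessions (a common convention, not something the text specifies), and SQLite as the downstream store.

```python
import sqlite3
from collections import defaultdict

SESSION_GAP = 30 * 60  # assumed inactivity timeout, in seconds

# 1. Read: two hypothetical sources with different formats.
CSV_SOURCE = ["10.0.0.1,100,/home", "10.0.0.1,400,/buy", "bad line"]
TSV_SOURCE = ["10.0.0.1\t5000\t/home", "10.0.0.2\t50\t/faq"]

def parse(lines, sep):
    """2. Parse and clean: split fields and drop malformed records."""
    for line in lines:
        parts = line.split(sep)
        if len(parts) != 3 or not parts[1].isdigit():
            continue
        ip, ts, url = parts
        yield ip, int(ts), url

def sessionize(events):
    """3. Transform: group events by user, sort by time, and start a new
    session whenever the gap since the previous event exceeds SESSION_GAP."""
    by_user = defaultdict(list)
    for ip, ts, url in events:
        by_user[ip].append((ts, url))
    for ip, visits in by_user.items():
        visits.sort()
        session, last_ts = 0, None
        for ts, url in visits:
            if last_ts is not None and ts - last_ts > SESSION_GAP:
                session += 1
            last_ts = ts
            yield ip, session, ts, url

def run_pipeline():
    events = list(parse(CSV_SOURCE, ",")) + list(parse(TSV_SOURCE, "\t"))
    # 4. Decide what to store: keep only (ip, session, url).
    selected = [(ip, s, url) for ip, s, _ts, url in sessionize(events)]
    # 5. Load into a downstream store (an in-memory SQLite table here).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sessions (ip TEXT, session INTEGER, url TEXT)")
    conn.executemany("INSERT INTO sessions VALUES (?, ?, ?)", selected)
    return conn
```

In an MR job, `parse` would typically live in the Map phase and the per-user grouping in `sessionize` would come from the shuffle and Reduce phase; it is collapsed into one process here for readability.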

MR-style systems also excel at complex analytics, because in many data mining applications the program must make multiple iterative passes over the data. Such applications cannot be structured as single SQL aggregate queries; instead, they require a complex dataflow program in which the output of one part of the application is the input of another. MR is a strong fit for such applications. Take a look at Andrew Ng’s work (published over five years ago), Map-Reduce for Machine Learning on Multicore. A possible next step is to port the MADlib project to MR as part of the Mahout project.
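K-means clustering is a classic example of an iterative, multi-pass algorithm that fits this dataflow pattern. The Python sketch below is a toy one-dimensional version: each iteration is one map (assign each point to its nearest centroid) plus one reduce (average the points per cluster), and the driver feeds each job’s output centroids into the next job until they stop moving. The function names and data are hypothetical.

```python
from collections import defaultdict

def kmeans_map(point, centroids):
    """Map: emit (index of nearest centroid, (point, 1))."""
    nearest = min(range(len(centroids)), key=lambda i: abs(point - centroids[i]))
    return nearest, (point, 1)

def kmeans_reduce(pairs):
    """Reduce: per cluster, sum points and counts, then average."""
    sums = defaultdict(lambda: [0.0, 0])
    for cluster, (point, count) in pairs:
        sums[cluster][0] += point
        sums[cluster][1] += count
    return {c: total / n for c, (total, n) in sums.items()}

def kmeans(points, centroids, max_iters=20):
    """Driver: each iteration is one MapReduce pass whose output (the new
    centroids) is the input of the next pass; stop when nothing moves."""
    for _ in range(max_iters):
        new = kmeans_reduce(kmeans_map(p, centroids) for p in points)
        # Keep the old centroid if a cluster received no points.
        updated = [new.get(i, c) for i, c in enumerate(centroids)]
        if updated == centroids:
            break
        centroids = updated
    return centroids
```

For example, `kmeans([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [0.0, 5.0])` converges to `[2.0, 11.0]` after three passes. A single SQL aggregate query cannot express this loop; it needs a driver program around repeated queries or jobs.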

See my broader list of use-cases here.

Total Cost Of Ownership

Just as with cloud computing, you have to take the “total cost of ownership” (TCO) into account before you can realize the real benefits. And by TCO, I mean the cost in terms of people and time-to-market as well as hardware and software. When we perform a TCO analysis for the cloud, we sum the traditional costs over five years and then reduce them to $/user/month. This way, you can compare them to the on-demand model.
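The normalization step is simple arithmetic; a minimal Python sketch follows. The cost categories and dollar figures are made up for illustration and are not from any real TCO study.

```python
def tco_per_user_month(five_year_costs, users, years=5):
    """Sum every cost category over the period, then normalize to dollars
    per user per month so it can be compared with on-demand pricing."""
    total = sum(five_year_costs.values())
    return total / (users * years * 12)

# Hypothetical five-year costs, including people, not just hardware/software.
costs = {"hardware": 300_000, "software": 200_000, "people": 700_000}
print(tco_per_user_month(costs, users=100))  # 200.0 ($/user/month)
```

Here $1,200,000 over 100 users and 60 months works out to $200/user/month, a figure that can be set directly against an on-demand price.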

In the BIG DATA space, we can do a similar analysis to get a true TCO as well. To keep things simple, let’s look at the following BI / analytics example for the data scientist, a simple process with these steps:

- Data load

- Data Selection

- Aggregation

- Join

- UDF Processing & Aggregation

Let’s say we analyze web-log data of user visits. We join this visit data with a table of PageRank values, and the analysis consists of two subtasks:

- Subtask 1: Find the IP address (user) that generated the most revenue within a particular date range

- Subtask 2: Calculate the average PageRank of all pages visited during that date range
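On the RDBMS side, the two subtasks map onto the load, selection, aggregation, and join steps listed above. The Python sketch below runs them against an in-memory SQLite database; the table names, column names, and sample rows are all hypothetical and are not the data or schema of any published benchmark.

```python
import sqlite3

# Hypothetical sample data.
RANKINGS = [("a.com", 10), ("b.com", 30), ("c.com", 20)]
USER_VISITS = [  # (source_ip, dest_url, visit_date, ad_revenue)
    ("10.0.0.1", "a.com", "2000-01-16", 1.0),
    ("10.0.0.2", "b.com", "2000-01-17", 4.0),
    ("10.0.0.1", "c.com", "2000-01-18", 2.0),
    ("10.0.0.2", "a.com", "2000-02-01", 9.0),  # outside the date range
]

def run_analysis(start, end):
    conn = sqlite3.connect(":memory:")
    # Data load (schema first, then rows).
    conn.execute("CREATE TABLE rankings (page_url TEXT, page_rank INTEGER)")
    conn.execute("CREATE TABLE user_visits (source_ip TEXT, dest_url TEXT,"
                 " visit_date TEXT, ad_revenue REAL)")
    conn.executemany("INSERT INTO rankings VALUES (?, ?)", RANKINGS)
    conn.executemany("INSERT INTO user_visits VALUES (?, ?, ?, ?)", USER_VISITS)
    # Subtask 1: selection (date range) + aggregation (revenue per IP).
    # ISO-8601 date strings compare correctly as text.
    top_ip, revenue = conn.execute(
        "SELECT source_ip, SUM(ad_revenue) AS total FROM user_visits"
        " WHERE visit_date BETWEEN ? AND ?"
        " GROUP BY source_ip ORDER BY total DESC LIMIT 1",
        (start, end)).fetchone()
    # Subtask 2: join with rankings, then average PageRank over the range.
    (avg_rank,) = conn.execute(
        "SELECT AVG(r.page_rank) FROM user_visits AS uv"
        " JOIN rankings AS r ON r.page_url = uv.dest_url"
        " WHERE uv.visit_date BETWEEN ? AND ?",
        (start, end)).fetchone()
    conn.close()
    return top_ip, revenue, avg_rank
```

With this data, `run_analysis("2000-01-15", "2000-01-22")` returns `("10.0.0.2", 4.0, 20.0)`.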

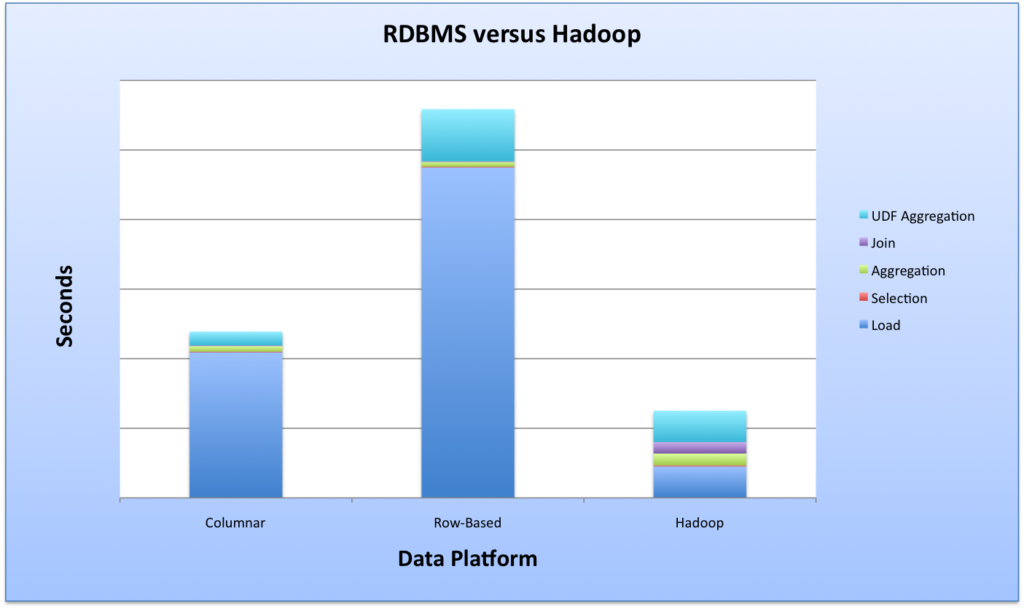

Here’s the result of this use-case:

On the surface, you might be inclined to compare just one operation, such as the join, between the RDBMS and the equivalent MR function and conclude that MR is slower. But if we look at the entire end-to-end process for the data scientist, the picture might change. The comparison above, between a Hadoop framework and both a columnar and a standard RDBMS, offers some interesting insights into the process. I could add database / logical modeling, etc. and continue to fill out the picture. But you get the idea. [Note: I’m not including any specifics of the benchmark, because I’m trying to make a high-level, general point here and don’t want to get caught up in the details.] So, I conclude with two theses.
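For context on what “the equivalent MR function” for a join can look like, here is a minimal Python sketch of a reduce-side (repartition) join, one standard way to express a join in MapReduce; the text does not say which MR join strategy the comparison used. Map tags each record with its source table and keys it by URL, the shuffle groups both tables’ records by that key, and Reduce pairs them up. All names and data are hypothetical.

```python
from collections import defaultdict

def join_map(source, record):
    """Map: key every record by the join attribute (URL) and tag it with
    the table it came from."""
    if source == "rankings":
        url, rank = record
        return url, ("R", rank)
    ip, url, revenue = record
    return url, ("V", (ip, revenue))

def join_reduce(url, tagged_values):
    """Reduce: for one URL, pair every visit with the page's rank
    (an inner join: URLs missing from either side produce nothing)."""
    ranks = [v for tag, v in tagged_values if tag == "R"]
    visits = [v for tag, v in tagged_values if tag == "V"]
    return [(ip, url, revenue, rank) for rank in ranks for ip, revenue in visits]

def reduce_side_join(rankings, visits):
    groups = defaultdict(list)  # the shuffle: group map output by key
    inputs = [("rankings", r) for r in rankings] + [("visits", v) for v in visits]
    for source, record in inputs:
        key, value = join_map(source, record)
        groups[key].append(value)
    joined = []
    for url, values in groups.items():
        joined.extend(join_reduce(url, values))
    return joined
```

Because every record of both tables passes through the shuffle, a join written this way can look slow next to a tuned DBMS join when measured in isolation, which is exactly why the end-to-end view matters.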

Thesis #1: if we’re talking about “discovery” environments where you need to perform iterative exploration of the data asking questions like, “what, why, what will, what if?”, you might need to consider the full end-to-end process when you decide which platforms are best for your organization.

Do you agree with this?

Lastly, why did I title this post, “Big Data is Thriving. Is RDBMS Dead?” Well, other than trying to be a bit controversial, I have had this philosophical discussion with my friends in the industry. The answer is obviously, “no”. But there is definitely an undertone that the Big Data Warehouse is storing more data than the traditional EDW, and that ETL and analytic tasks will begin to migrate off the EDW (and associated data marts) into Hadoop Big Data marts.

Thesis #2: enterprises will begin to build big data warehouses that enable quick ETL operations, ultimately supporting advanced analytic BI applications (which will no longer be supported by the EDW or associated data marts).

Agree with this?

what a crock of shit. your diagram doesn’t even have values. you have no idea what you’re talking about and therefore shouldn’t be posting information on this subject. go learn something first, then write something next.

Such a nice comment, Aps Reine from Belgium! Not typically worth responding to…

Let’s see… I worked for Teradata for 10 years, was one of the five guys who invented the BYNET (which makes Teradata linearly scalable), have worked on some of the largest databases in the world, and worked on the early TPC benchmarks… and who are you?

The data was removed on purpose, so people wouldn’t get caught up in the details but would focus on the concepts.

Here is the study done with Stonebraker and others whom I respect:

http://www.cse.nd.edu/~dthain/courses/cse598z/spring2010/benchmarks-sigmod09.pdf