What Does HA in the Cloud Mean?

What is High Availability (HA)? It is easiest to define with an example:

99.9999% (“six nines”) system availability = only 31.5 seconds of unplanned downtime per year, or 2.59 seconds per month, or 0.605 seconds per week.
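
The arithmetic behind those figures is simple enough to check yourself. The sketch below is only an illustration: the function name is my own, and it assumes a 365-day year and a 30-day month.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def downtime_seconds(availability_percent: float, period_days: float) -> float:
    """Downtime budget in seconds for a given availability over a period."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * period_days * SECONDS_PER_DAY


if __name__ == "__main__":
    # Assumed period lengths: 365-day year, 30-day month, 7-day week.
    for label, days in (("year", 365), ("month", 30), ("week", 7)):
        print(f"{label}: {downtime_seconds(99.9999, days):.3f} s")
```

Swapping in 99.9 or 99.99 shows how quickly the downtime budget grows with each "nine" you give up.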

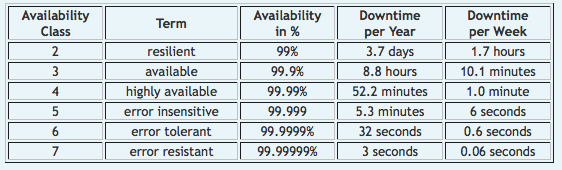

In short, HA is a system-level feature (architecture, design, software suite) that ensures system uptime. It becomes more important as the service becomes more mission-critical. The following table provides “availability classes” based on associated downtime amounts (courtesy of Zimory):

HA is designed to preserve your most valuable resource: time. Every second your system is down costs you and your customers money, and every second your system administrator spends solving downtime problems costs you more.

The Harvard Research Group (HRG) defines six classes of availability in its Availability Environment Classification (AEC):

- Conventional (AEC-0): Function can be interrupted, data integrity is not essential.

- Highly Reliable (AEC-1): Function can be interrupted, but data integrity must be ensured.

- High Availability (AEC-2): Function may be interrupted only minimally, within fixed times or during the main operating hours.

- Fault Resilient (AEC-3): Function must be maintained continuously, within fixed times or during the main operating hours.

- Fault Tolerant (AEC-4): Function must be maintained continuously; 24x7 operation (24 hours a day, 7 days a week) must be ensured.

- Disaster tolerant (AEC-5): Function must be available under all circumstances.

Cloud HA

High availability in the cloud will exist at every level of the hardware and software stack including:

- Datacenter resources: power, air conditioning, etc.

- System hardware resources: storage, server, and networking.

- Operating system with bundled HA

- Database bundled HA

- Virtual machine HA

- Application HA (Web-server, ERP, etc.)
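
One reason HA has to exist at every level: the layers are stacked in series, so the availability of the whole stack is roughly the product of the availabilities of its layers. Here is a minimal sketch, assuming layer failures are independent (which real outages often are not) and using made-up per-layer figures:

```python
from math import prod


def serial_availability(layer_availabilities):
    """Availability of a stack in which every layer must be up at once.

    Assumes independent failures; values are fractions such as 0.9999.
    """
    return prod(layer_availabilities)


if __name__ == "__main__":
    # Hypothetical: six layers, each at 99.99%.
    layers = [0.9999] * 6
    print(f"stack availability: {serial_availability(layers):.6f}")
```

Even with every layer at "four nines", the stack as a whole lands below four nines, which is why a single weak layer can undo the investment in the others.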

Datacenter HA

This typically means that you have redundancy built into your architecture by: 1) using multiple datacenters (leveraging redundancy across them), and 2) benefiting from redundancy for all resources within the datacenter (e.g. multiple air conditioners). This doesn’t include redundancy for the compute platform components.

The following definition for the classification of data centers is typically used:

- Tier 4: Has multiple active supply paths for power and air-conditioning; has redundant components, is fault-tolerant, and provides an availability of at least 99.995%

- Tier 3: Has multiple supply paths for power and air-conditioning, with only one active in standard use; has redundant components, can be maintained while in operation, and provides an availability of at least 99.982%

- Tier 2: Has one path each for power and air-conditioning; has redundant components and provides an availability of at least 99.741%

- Tier 1: Has one path each for power and air-conditioning; has no redundant components and provides an availability of at least 99.671%
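
To put the tier percentages in perspective, the sketch below converts them into hours of downtime per year, and estimates what running in two datacenters buys you. The parallel formula assumes the sites fail independently and that either one can carry the full load, which is an idealization; the function names are my own.

```python
HOURS_PER_YEAR = 365 * 24

# Minimum availability per tier, in percent, from the list above.
TIER_AVAILABILITY = {1: 99.671, 2: 99.741, 3: 99.982, 4: 99.995}


def annual_downtime_hours(availability_percent):
    """Hours of downtime per year implied by an availability figure."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


def parallel_availability(availability_percent, copies):
    """Availability (percent) of `copies` independent sites, any one sufficient."""
    unavailable = 1 - availability_percent / 100
    return (1 - unavailable ** copies) * 100


if __name__ == "__main__":
    for tier, pct in sorted(TIER_AVAILABILITY.items()):
        print(f"Tier {tier}: {annual_downtime_hours(pct):.2f} h/year")
    print(f"Two Tier 3 sites: {parallel_availability(99.982, 2):.6f}%")
```

The gap between Tier 1 and Tier 4 is more than a full day of downtime per year versus under half an hour.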

True story: to this day, I remember getting a page at 3am (I had my VP of Operations set up an automated text message to my phone for all Severity 1 and 2 support calls). It so happened that at 2:45am the datacenter had an air conditioning outage. Of course, there was an automatic switchover to a redundant unit, which also failed.

My staff and I were notified of rising temperatures on our SaaS platform (at the time this platform was a 100-node cluster consisting of several sub-clusters). By the time the datacenter issue was addressed, our system had experienced such high temperatures that we began to detect failures in our database cluster (more pages). Although database failover occurred automatically, multiple database servers failed intermittently (the worst kind of failure).

By the time we had manually recovered from the database cluster failures, the customer had experienced approximately 228 minutes of what we classified as a “Severity 1” defect, far more downtime than our SLA allowed, of course.

Needless to say, I personally made some very uncomfortable calls to the customer, and we then made some additional changes to our system HA design to ensure that this 3-sigma event wouldn’t occur again. I’ll add that this was not the only time we had datacenter-level issues. My other story is from when the datacenter staff was testing their redundant battery backup and accidentally powered down our entire system and scrambled the brains of our Orca disk array subsystems!

Hardware HA

High availability for your hardware resources (computing, storage, and networking) begins with redundancy. With storage, my experience involves using disks in a RAID 5 configuration. In addition to this, we deployed 100% redundancy (RAID 1) across a separate cabinet, preferably in a separate datacenter (e.g. RAID 5 on a server and then those disks mirrored on another server with RAID 5). This protected us against two disks dying within one storage subsystem, against a storage subsystem going down, against a cabinet going down, and against a datacenter going down (see my previous story).
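
If you have never looked at how RAID 5 survives a disk failure, the core idea is XOR parity: the parity block is the XOR of the data blocks, so any one missing block can be rebuilt from the rest. The sketch below is a toy illustration of that idea only. Real arrays rotate parity across disks and work on stripes, and the function names here are my own.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of equally sized byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity(data_blocks):
    """Parity block for one stripe of data blocks."""
    return xor_blocks(data_blocks)


def rebuild(surviving_blocks, parity_block):
    """Recover the single missing data block from the survivors plus parity."""
    return xor_blocks(list(surviving_blocks) + [parity_block])


if __name__ == "__main__":
    stripe = [b"AAAA", b"BBBB", b"CCCC"]  # one block per data disk
    p = parity(stripe)
    # Pretend the second disk died and rebuild its block.
    print(rebuild([stripe[0], stripe[2]], p))
```

Parity only covers one lost block per stripe, which is exactly why we mirrored the whole RAID 5 set to a second server.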

Then we built redundancy into our processing nodes by deploying commodity clusters (a number of Linux servers which were part of the same resource pool servicing a suite of specific applications). Each of our servers was inherently redundant, using an HA cluster configuration. In addition to this, we had redundant I/O controller cards (storage and network), redundant power supplies within the server, and redundancy built into our cabling schemes (e.g. redundant cables to NICs). We also made sure that servers within a certain functional cluster were distributed across multiple cabinets.

Lastly, our architecture included redundancy for all our switches (networking gear) similar to our compute nodes.

OS HA (Linux)

They don’t get much press, but there are many good high availability options available for Linux. Here are a few:

- High-Availability Linux Project: The grand-daddy of open source HA, which provides the heartbeat failure detection daemon, the poetically named “shoot the other node in the head” (STONITH) fencing daemon, and the documentation needed to build your own automatic-failover cluster.

- Linux Virtual Server: Primarily intended to provide scalability through load balancing, but also incorporates high availability via heartbeat, failoverd and shared storage.

- Red Hat Cluster Suite: Combines failover daemons, a reasonable graphical configuration panel, and the GFS distributed file system. Integrates with commercial fencing hardware such as the HP/Compaq Integrated Lights Out API. Since Red Hat is open source, all the components of this otherwise payware solution are also available in the excellent (and free) CentOS distribution.

Linux-HA is an open source project whose Heartbeat daemon provides cluster infrastructure (communication and membership) services to its clients. This allows clients to know about the presence (or disappearance!) of peer processes on other machines and to easily exchange messages with them. Heartbeat is also a leading implementor of the Open Cluster Framework (OCF) standard and, when combined with a resource manager like Pacemaker, is competitive with commercial systems. The current stable series of Heartbeat can be obtained for many Linux platforms (including CentOS, RHEL, Fedora, openSUSE and SLES).

The following Linux distributions are more likely to be deployed in larger datacenter environments. I also include a reference to HA features (commonly included as a cluster offering):

- Red Hat Enterprise Linux: Red Hat Cluster Suite

- SUSE Linux Enterprise Server: High Availability Extension

- Oracle Enterprise Linux: Oracle Clusterware

- Ubuntu 9.04 Server Edition: Heartbeat/Pacemaker for clusters

- Mandriva Enterprise Server 5 (MES5): Mandrake Linux Clustering

Red Hat’s HA offering, Red Hat Cluster Suite, provides a complete ready-to-use failover solution. For applications it does not cover out of the box, customers can create custom failover scripts using the provided templates.

Database HA

Many databases build HA into the RDBMS kernel. Oracle has taken the approach of building a set of tightly integrated HA features such as data protection, table repair, and database failover. Oracle Real Application Clusters (RAC) is the premier database clustering technology that allows two or more computers (“nodes”) in a cluster to concurrently access a single shared database. This database system spans multiple hardware systems, yet appears to the application as a single unified database. Oracle Database 11g includes Oracle Clusterware, a complete, integrated clusterware management solution available on all Oracle Database 11g platforms. Oracle Clusterware includes a High Availability API to make applications highly available, and it can be used to monitor, relocate, and restart your applications.

Virtual Machine HA

Xen

An example of VM HA is Citrix’s partnership with Marathon Technologies, whose everRun product recovers and restarts not only the virtual machine and its software stack but the application data as well. everRun monitors the exchanges between a running virtual machine and its hypervisor, detecting when work is interrupted and moving the VM to a healthy server.

In order to understand the enhanced levels of HA that everRun VM offers, it’s important to first understand what XenServer HA does and does not address.

- First, XenServer HA is a best-effort solution; there is no guarantee of a restart on another host. This is because XenServer HA does not ensure that resources are allocated on other hosts, so it’s possible that a host failure might result in a situation where there are not enough resources to restart the VMs from that failed host. This would be similar to disabling availability constraints in VMware HA and allowing the HA cluster to power on more VMs than could be supported in the event of a host failure. In this regard, XenServer HA is a bit less powerful than VMware HA.

- Second, XenServer HA sends heartbeats not only over network interfaces but also across storage adapters. This is a significant advantage over VMware HA, as it helps to eliminate the dependency upon network connectivity to the Service Console/Dom0.

With that in mind, adding everRun VM to an existing XenServer HA implementation now adds some useful new functionality:

- everRun will set up a separate compute environment (another VM) on a separate host to reserve memory in the event of a failure. This enables everRun to provide guaranteed recovery in the event of a host failure.

- everRun VM provides component-level fault tolerance, meaning that if a host’s storage connectivity is lost, it can fail that connectivity over to a different host. This is accomplished through the use of private interconnects between members of the everRun VM resource pool. In my mind, this is pretty powerful stuff. Being able to fail disk I/O from the HBA in this server to the HBA in a different server over a set of interconnects is really powerful.
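
The difference between a best-effort restart and reserved capacity comes down to a placement question: after a host fails, do the surviving hosts have room for its VMs? The sketch below is my own illustration of that check, not how XenServer, VMware or everRun actually implement it. It considers memory only and uses a first-fit-decreasing heuristic, which is conservative (it can answer "no" in rare cases where a cleverer packing would fit).

```python
def can_restart_all(vm_memory_gb, free_memory_gb):
    """Check whether every VM from a failed host fits on the surviving hosts.

    vm_memory_gb: memory needed by each VM that must be restarted.
    free_memory_gb: free memory on each surviving host.
    Places the largest VMs first on the first host with enough room.
    """
    free = sorted(free_memory_gb, reverse=True)
    for need in sorted(vm_memory_gb, reverse=True):
        for i, available in enumerate(free):
            if available >= need:
                free[i] -= need
                break
        else:
            # No surviving host can take this VM.
            return False
    return True


if __name__ == "__main__":
    print(can_restart_all([8, 4, 4], [8, 8]))  # fits
    print(can_restart_all([8, 8], [12, 4]))    # enough in total, but fragmented
```

The second case is the interesting one: total free memory matches total demand, yet one VM still cannot be placed. Reserving capacity up front avoids finding that out during an outage.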

VMware

VMware HA provides failover protection against hardware and operating system failures within your virtualized IT environment. VMware Fault Tolerance provides continuous availability for applications in the event of server failures by creating a live shadow instance of a virtual machine that is in virtual lockstep with the primary instance. By allowing instantaneous failover between the two instances in the event of hardware failure, VMware Fault Tolerance eliminates even the smallest data loss or disruption.

Application HA

Application software (including the base OS) is covered by a host of independent solutions including (but not limited to):

- Tivoli System Automation (IBM)

- Advanced Server (Red Hat)

- Lifekeeper (Steeleye/SIOS)

- Polyserve

A central concept with most high-availability solutions is the system heartbeat. One server sends a signal to the other to determine system and application health. Heartbeat communication path options include serial port and LAN. It is a good idea to use multiple paths, such as serial and LAN or multiple LAN connections.
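
Here is a minimal sketch of the heartbeat idea with multiple paths: the peer is only declared dead when every path has been silent for longer than a timeout. The class, the path names and the 3-second timeout are all hypothetical, and a real implementation (Heartbeat, for example) also handles fencing and membership, which this ignores.

```python
import time


class HeartbeatMonitor:
    """Tracks the last heartbeat seen from a peer on each communication path."""

    def __init__(self, paths, timeout_seconds=3.0, clock=time.monotonic):
        self._clock = clock
        self._timeout = timeout_seconds
        now = clock()
        # Treat every path as freshly seen at start-up.
        self._last_seen = {path: now for path in paths}

    def record(self, path):
        """Call this whenever a heartbeat arrives on `path`."""
        self._last_seen[path] = self._clock()

    def live_paths(self):
        """Paths that delivered a heartbeat within the timeout."""
        now = self._clock()
        return [p for p, seen in self._last_seen.items()
                if now - seen <= self._timeout]

    def peer_is_alive(self):
        """The peer counts as alive while at least one path is still live."""
        return bool(self.live_paths())


if __name__ == "__main__":
    monitor = HeartbeatMonitor(["serial", "lan"])
    monitor.record("lan")
    print(monitor.live_paths(), monitor.peer_is_alive())
```

With a single path, a pulled cable looks exactly like a dead server; with two, you only start a failover when both go quiet.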

The failover models include active/active, active/standby and N+1. In active/active configuration, each server in the cluster is providing its own set of applications and services. If one fails, the other takes over. Users may experience some degradation of services, because the remaining system is serving both sets of applications and services, although it does allow for maximum resource utilization.

Active/standby provides the best continuity of service after a failure. However, it requires a redundant system and the associated cost. In N+1 configuration, one standby system provides failover protection for multiple active systems. This configuration provides reasonable utilization of resources while minimizing cost. If multiple failures should occur, users still may experience some increase in response time. Alternately, other active servers could be configured to take over.
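
The trade-off between these models shows up in one decision: who takes over when a node fails? The sketch below is a simplified illustration under my own assumptions (node names, a single load number per node); no particular product works exactly this way.

```python
def choose_takeover(failed, active_load, standbys):
    """Pick the node that takes over the services of `failed`.

    active_load: dict mapping active node name -> current load (any unit).
    standbys: list of idle standby node names (empty in active/active).
    """
    if standbys:
        # Active/standby or N+1: an idle node takes over at full capacity.
        return standbys[0]
    # Active/active: the least loaded survivor absorbs the extra work,
    # so users may notice slower responses.
    survivors = {n: load for n, load in active_load.items() if n != failed}
    if not survivors:
        raise RuntimeError("no node left to take over")
    return min(survivors, key=survivors.get)


if __name__ == "__main__":
    load = {"a": 0.6, "b": 0.5, "c": 0.2}
    print(choose_takeover("a", load, ["spare"]))  # N+1: the spare
    print(choose_takeover("a", load, []))         # active/active: least loaded
```

In an N+1 setup the caller would remove the chosen standby from the list afterwards; a second failure then falls through to the active/active branch, which is the "other active servers take over" case described above.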

IBM’s Tivoli is one of the most recognized incumbents in the “legacy” system management software space, and one we always competed with while I was at NCR Corporation.

All these HA software suites help meet high levels of availability and prevent service disruptions for critical applications and middleware running on heterogeneous platforms and, now, virtualization technologies. Functionality varies, but generally has a list similar to the following:

- Initiates, executes and coordinates the starting, stopping, restarting and failing over of individual application components or entire composite applications

- Provides standard toolset that supports multiple failover scenarios involving both physical and virtual environments

- Offers advanced clustering technologies, including support for sophisticated clustering configurations—such as n:1 or n:m configurations—to help reduce the number of required hardware servers

- Delivers advanced, policy-based automation to ease operational management of complex IT infrastructures by eliminating the need for extensive programming skills to create and maintain scripts and procedures

- Provides plug-and-play policy modules that integrate best practices for third-party software solutions, allowing out-of-the-box failure detection and recovery to help drive operational cost savings and continuous high availability

- Speeds recovery through the ability to define resource dependencies to quickly associate conditions with resources, enabling operators to choose the proper corrective actions within the right context

- Integrates with the broader portfolio of high availability and event automation offerings that enable systematic implementation and execution of high availability operations across applications, middleware and platforms

Applications vary considerably with typical classes including:

- Database servers

- ERP applications

- Web servers

- LVS director (load balancer) servers

- Mail servers

- Firewalls

- File servers

- DNS servers

- DHCP servers

- Proxy Caching servers

- Custom applications

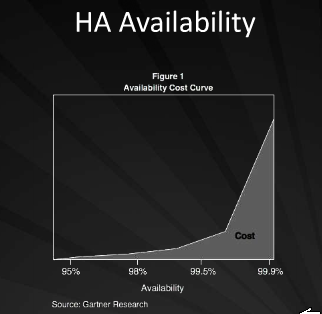

HA Comes at a Cost

Does HA come at a cost? The quick answer: YES! As you add HA within each level of the system, you’re paying more in hardware, software, and even IT resources (although some will say this expense should actually decrease with the HA automation).

The Future of HA

Is there room for anything outside of VMware?

High-availability processing was pioneered by the long-defunct Tandem Computers, whose NonStop computers twinned and shadowed every hot-swappable component, with transfer of processing between “sides” under the supervision of the Guardian NonStop Kernel (NSK) operating system.

If you substitute “VMware ESX” for “Guardian NSK” in the above paragraph, you have an almost exact description of VMware’s plans for the future of a high-availability computing architecture. In this model, virtual computing resources, including CPU, storage and memory, are added and replaced on the fly, while workloads are shadowed by virtual machines (VMs) and entire data centre operations can be migrated without interruption to different physical processing locations.

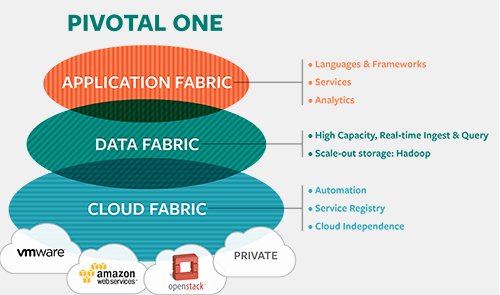

Other Opportunities

There’s a much broader set of high availability functionality than VMware HA. What if you want to use multiple clouds? Say you want to have AWS and Zimory provide you services and you balance across them? Why not? In the digital media world, I used both Akamai and Limelight, leveraging one against the other and using one as a failover in case response times didn’t meet my specs.
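
The "use one as a failover when response times miss spec" policy is easy to express. The sketch below is a hypothetical illustration: the provider names and the latency threshold are made up, and how you measure latency (probes, real user metrics) is left out entirely.

```python
def pick_provider(latencies_ms, preferred_order, max_latency_ms):
    """Return the first preferred provider that currently meets the latency spec.

    latencies_ms: dict of provider name -> latest measured response time;
                  a provider missing from the dict is treated as unreachable.
    Returns None when no provider meets the spec.
    """
    for name in preferred_order:
        latency = latencies_ms.get(name)
        if latency is not None and latency <= max_latency_ms:
            return name
    return None


if __name__ == "__main__":
    measured = {"cdn_a": 250, "cdn_b": 90}  # hypothetical measurements
    print(pick_provider(measured, ["cdn_a", "cdn_b"], max_latency_ms=200))
```

The same shape works for clouds as well as CDNs: keep a preference order, measure continuously, and route to the first provider that is within spec.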

What if you want to fail over between geographic locations? Sure, Amazon EC2 provides the ability to place instances in multiple locations, but let’s dig a little more into this. Amazon EC2 locations are composed of Regions and Availability Zones. Availability Zones are distinct locations that are engineered to be insulated from failures in other Availability Zones and provide inexpensive, low latency network connectivity to other Availability Zones in the same Region. By launching instances in separate Availability Zones, you can protect your applications from failure of a single location. Regions consist of one or more Availability Zones, are geographically dispersed, and will be in separate geographic areas or countries. The Amazon EC2 Service Level Agreement commitment, however, is only 99.95% availability for each Amazon EC2 Region, and Amazon EC2 is currently only available in two regions: one in the US and one in Europe. Will this suffice for all enterprise requirements? Maybe not. Is there an opportunity to provide HA across public cloud providers, across different geographic regions? Maybe.
