Datacenter Evolution

Mainframes

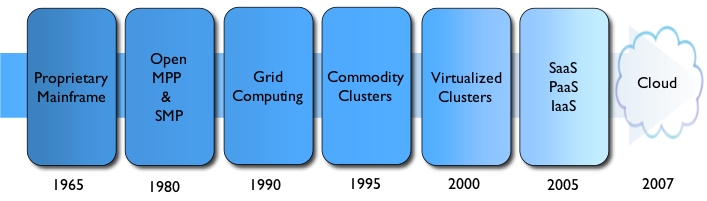

So if we take a little trip down memory lane, we can quickly appreciate how things progressed to today’s over-hyped cloud computing space. From the 1950s to the early 1970s, “IBM and the Seven Dwarfs” introduced the mainframe computer. This group included IBM, Burroughs, UNIVAC, NCR, Control Data, Honeywell, General Electric and RCA (disclosure: I worked for NCR for over 10 years). Nearly all mainframes had, and still have, the ability to host multiple operating systems, and can therefore operate not as a single computer but as a number of virtual machines. Mainframes served Fortune 1000 companies with critical applications (typically bulk data processing), including census, industry and consumer statistics, enterprise resource planning, and financial transaction processing.

MPP & SMP

Shrinking demand and tough competition caused a shakeout in the market in the early 1980s. Companies found that servers based on parallel microcomputer designs (predominantly categorized as either massively parallel processing, MPP, or symmetric multiprocessing, SMP) could be deployed at a fraction of the acquisition price and, given the IT policies and practices of the time, gave local users much greater control over their own systems. Terminals used for interacting with mainframe systems were gradually replaced by personal computers connected over a network to servers.

Grid

Then came the era of Grid Computing. Grid computing is the most distributed form of parallel computing: it uses computers communicating over the Internet to work on a given problem. Because of the low bandwidth and high latency of Internet links, grid computing typically deals only with embarrassingly parallel problems, which split into independent pieces that need little or no communication with each other. The Grid wave came in the 1990s as a way to solve big problems such as protein folding, financial modeling, earthquake simulation, and climate/weather modeling. The main Grid use case was a single large application that required a large amount of dedicated resources.
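To make “embarrassingly parallel” concrete, here is a minimal Python sketch, not taken from any real grid middleware: a Monte Carlo estimate of π split into independent work units. The names `work_unit`, `estimate_pi`, and `num_units` are hypothetical, and a local thread pool stands in for remote grid nodes. Because Python threads share one interpreter lock, this shows the task decomposition rather than a real speedup; an actual grid would ship each unit to a separate machine.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def work_unit(seed: int, samples: int = 100_000) -> int:
    """One independent work unit: count random points that land inside the
    unit quarter-circle. It needs no data from any other unit."""
    rng = random.Random(seed)  # per-unit generator, so results are reproducible
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(num_units: int = 8, samples: int = 100_000, workers: int = 4) -> float:
    """Farm the units out to a pool of workers (standing in for grid nodes),
    then combine the partial results in one final step."""
    seeds = range(num_units)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        hits = sum(pool.map(lambda s: work_unit(s, samples), seeds))
    return 4.0 * hits / (num_units * samples)


if __name__ == "__main__":
    print(estimate_pi())
```

Each unit needs only its seed, so units can run in any order on any node, and the only communication is the final sum. That property is what lets such problems tolerate the Internet’s low bandwidth and high latency.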

Commodity Clusters

Next came the most disruptive era of datacenter computing, namely Cluster Computing, which really began to take off in the mid-1990s. Clusters are loosely coupled “commodity” servers, usually deployed to improve performance and/or availability over that of a single computer (e.g., a mainframe, MPP, or SMP server), and they are typically much more cost-effective than single computers of comparable speed or availability. One can argue that Grid and clusters emerged simultaneously, or perhaps in a different order. However, since commodity clusters have grown consistently while Grid has practically died, it’s simpler to present history as Grid first, then replaced by Commodity Clusters.

In 1994 came the first “Beowulf”-style cluster: a compute cluster built on top of a commodity network for the specific purpose of “being a supercomputer” capable of performing tightly coupled parallel HPC computations. The transformative aspect of this platform was its use of low-cost equipment. A Beowulf cluster is built from commodity hardware components, such as PCs capable of running a Unix-like operating system, standard Ethernet adapters, and switches. It contains no custom hardware components and is meant to be trivially reproducible.

Enter Virtualization

A virtual machine (VM) is a software implementation of a machine (e.g., a server) that executes programs like a physical machine. System virtual machines (sometimes called hardware virtual machines) allow the underlying physical machine’s resources to be shared between different virtual machines, each running its own operating system. The software layer providing the virtualization is called a virtual machine monitor or hypervisor. A hypervisor can run on bare hardware (Type 1, or native) or on top of an operating system (Type 2, or hosted). This is similar to the approach mainframes took early on. So why the renewed interest?

Several factors led to a resurgence in the use of virtualization technology among UNIX and Linux server vendors:

- Expanding hardware capabilities, allowing more simultaneous work to be done per machine

- Efforts to control costs and simplify management through consolidation of servers (reduced cap-ex)

- The need to control large multiprocessor and cluster installations (e.g. in server farms and render farms)

- The improved security, reliability, and device independence possible from hypervisor architectures

- The desire to run complex, OS-dependent applications in different hardware or OS environments

VMware, which was founded in 1998 and entered the server virtualization market in 2001 with VMware GSX Server (hosted) and VMware ESX Server (hostless, running on bare hardware), has dominated the virtualization market. Other leading virtualization platforms include Citrix Xen, Red Hat KVM, Microsoft Hyper-V, Sun xVM Server, and Parallels.

IaaS, PaaS, SaaS

Once the price of the hardware on which applications were built came down significantly, companies began pooling resources and giving small and medium-sized businesses on-demand access to compute resources. These companies introduced web-accessible services referred to as “Infrastructure as a Service”, “Platform as a Service”, or “Software as a Service” (XaaS, where X ∈ {I, P, S}), which later became generally known as the Cloud.

Infrastructure as a Service (IaaS) makes it easy and affordable to provision resources such as servers, network connections, storage, and related tools over the Internet, allowing enterprises to build an application environment from scratch on demand. IaaS offerings are complex to work with, but with that complexity comes a high degree of flexibility. Billing for these services is usually incremental by use and can get complex with tiered on-demand pricing. The best example of an IaaS provider is Amazon. Amazon does not offer a way to host your web applications out of the box on its platform; it simply provides virtualized hardware on which you can do whatever you like. This is the most basic of the XaaS offerings.
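As a rough illustration of how tiered on-demand pricing adds up, the Python sketch below bills usage in graduated tiers, where each GB is charged at the rate of the tier it falls into. The tier boundaries and prices in `TIERS`, and the function name `tiered_cost`, are made up for illustration and do not reflect any provider’s actual rates; real price lists also vary by region, resource type, and billing period.

```python
from decimal import Decimal

# Hypothetical graduated price list: (upper bound of tier in GB, price per GB).
# None means "no upper bound". These numbers are illustrative only.
TIERS = [
    (1_024, Decimal("0.15")),
    (10_240, Decimal("0.12")),
    (None, Decimal("0.09")),
]


def tiered_cost(usage_gb: int, tiers=TIERS) -> Decimal:
    """Charge each GB at the rate of the tier it falls into."""
    cost = Decimal("0")
    lower = 0  # GB already billed by earlier tiers
    for upper, price in tiers:
        if usage_gb <= lower:
            break  # all usage has been billed
        top = usage_gb if upper is None else min(usage_gb, upper)
        cost += (top - lower) * price
        if upper is None:
            break
        lower = upper
    return cost


if __name__ == "__main__":
    print(tiered_cost(2048))   # 276.48
    print(tiered_cost(20480))  # 2181.12
```

`Decimal` avoids binary floating-point rounding in money arithmetic. Note the assumption of graduated tiers: some price lists instead use volume pricing, where crossing a threshold re-prices all usage at the lower rate, which gives different totals.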

Platform as a Service (PaaS) provides a platform on which you can deploy your applications, making their deployment and scaling trivial and your costs incremental and reasonably predictable. Example providers include Google and Microsoft. Google, through its App Engine offering, lets you host your websites and your data and use some of its successful services, such as Bigtable. Microsoft offers a way to host your .NET applications, with potential integration with other Microsoft services and applications.

The main benefit of such a service is that for very little money (none, in some cases) you can launch your application with little effort beyond developing it, plus possibly some porting work if it’s an existing application. Additionally, a large degree of scalability is built into the PaaS by design. Finally, you most likely will not need to hire a professional systems administrator, because system administration is part of the service itself. If you are trying to keep your operations staff lean, this can be a useful path to follow, assuming your application can fit within the platform’s constraints.

Software as a Service (SaaS) has been around for a while now. One of the first SaaS applications was SiteEasy, a website-in-a-box for small businesses that launched in 1998 at Siteeasy.com. The best-known examples of SaaS are Gmail and Salesforce. SaaS applications differ from earlier applications delivered over the Internet (e.g., under the application service provider, or ASP, model) in that SaaS solutions were developed specifically to leverage web technologies such as the browser, making them web-native. In addition, the entire IaaS and PaaS technology layers are completely hidden from the customer: SaaS customers are presented only with the on-demand application and none of the IT behind it. This is the highest level of the XaaS offerings.

Cloud Computing

When Amazon first offered a limited public beta of EC2 (Amazon Elastic Compute Cloud) on August 25, 2006, no one could have known how successful and transformative virtual resources over the Internet would become. So what is the Cloud? It encompasses IaaS, PaaS, and SaaS. It’s an umbrella term that is generally agreed to consist of the following three components:

- Scalable commodity computing, storage, and networking

- Virtualization

- Self-service applications over the Internet

A more detailed definition: cloud computing is a style of computing in which dynamically scalable, and now almost always virtualized, resources are provided as a service over a network (the Internet, an intranet, or an extranet).

Cloud infrastructure (compute, storage, or networking) is about leveraging commodity hardware and using the power of software to slice, dice, and scale out capacity and performance, while delivering a service over a network (intranet or extranet). A cloud hosting architecture is now achieved by combining existing virtualization and commodity clustering techniques. A cloud provider must also use a failover or high-availability (HA) system so that it can deliver dynamically scalable and reliable resources.
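Here is a minimal sketch of the failover idea in Python: requests are spread round-robin across replicas, and any replica that fails its health check is skipped. `Node`, `FailoverPool`, and the boolean `healthy` flag are hypothetical stand-ins; a real HA system would probe nodes over the network, detect failures with timeouts, and usually replicate state as well.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Node:
    name: str
    healthy: bool = True  # stand-in for the result of a real health check


class FailoverPool:
    """Round-robin over replicas, skipping any that are marked unhealthy."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._next = count()  # ever-increasing round-robin counter

    def pick(self) -> Node:
        start = next(self._next)
        # Try each node once, starting from the round-robin position.
        for offset in range(len(self.nodes)):
            node = self.nodes[(start + offset) % len(self.nodes)]
            if node.healthy:
                return node
        raise RuntimeError("no healthy nodes available")


if __name__ == "__main__":
    pool = FailoverPool([Node("vm-1"), Node("vm-2"), Node("vm-3")])
    pool.nodes[1].healthy = False  # simulate vm-2 failing
    print([pool.pick().name for _ in range(4)])  # ['vm-1', 'vm-3', 'vm-3', 'vm-1']
```

In this simple scheme the next healthy neighbor absorbs a failed node’s share of traffic; production load balancers typically rebalance more evenly and return recovered nodes to rotation automatically.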