Enterprise Big Data Cloud

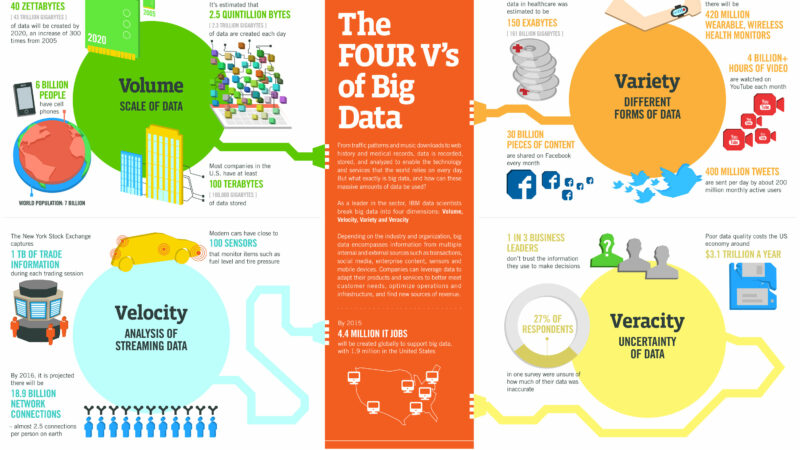

Big Data is confusing to most executives. It’s this nebulous concept of applying technologies from Yahoo!, Facebook, LinkedIn, and Twitter so that the organization truly becomes data-driven and, equally important, does so quickly. Unfortunately, only a few companies are realizing its full potential.

That’s why Infochimps is announcing its Enterprise Cloud, a Big Data cloud service built specifically for Fortune 1000 enterprises that want to rapidly explore how big data technology can unlock revenue from their data. The Infochimps Enterprise Cloud addresses several challenges that keep executives from quickly gaining value from this disruptive technology.

Enterprises are only leveraging 15% of their data assets

Enterprises, on average, capture and analyze about 15% of their data assets. Typical data sources include transactional data (who bought what). However, a 360-degree view of the business requires a 360-degree view of the customer, as well as of manufacturing, supply chain, finance, sales, marketing, engineering, etc. You will achieve maximum value from your data analytics only by capturing 100% of the enterprise’s operational data and then supplementing it with external data. For example, we recently talked to one pharmaceutical company about using external claims data from 100+ health plans covering more than 70 million people. With the Infochimps Enterprise Cloud, you can not only combine 100% of your private data in a private cloud, but also supplement it with at least as much external data.

Time-To-Market constrained by infrastructure deployments

Deploying new disruptive big data technologies (Hadoop, NoSQL/NewSQL, in-stream processing), and creating value from them, still takes considerable time as well as human and financial resources. Typical Enterprise Data Warehouse (EDW) projects take 18-24 months to deploy. Even simple changes to star-schema data models take at least 6 months to reach internal development organizations. Hadoop projects, although less complicated than EDW projects, take about 12 months to deploy. With the Infochimps Enterprise Cloud, you can deliver value in 30 days.

Big Data talent hard to find

When I read articles about the gap between supply and demand for big data talent, I think to myself, “this is not a situation where analysts are collecting a sample of 10 companies and then generalizing it to the entire market.” It’s a real problem. If you are some “antiquated” Fortune 1000 company (you know who you are) looking to hire crazy smart engineers and data scientists from Facebook…well, sorry…you don’t have the corporate culture or the exciting environment that this talent enjoys. McKinsey forecasts that the gap between demand for and supply of this talent is only going to widen (a 60% gap by 2018). With the Infochimps Enterprise Cloud, you can leverage your existing talent, because it provides a simple but powerful abstraction between your application development team and the complex big data infrastructure.

One Big Data technology does not fit all

There are hundreds of DBMS / data store solutions today, each with different strengths depending on data type and use case. As a result, business users and application developers get lost in the nuances of data infrastructure and lose focus on the business needs. Don’t listen to a single data store vendor who tells you that they can address all your business needs. You need several. With the Infochimps Enterprise Cloud, we force you to start with the business problem first, then we draw from a very comprehensive data services layer to address the needs of that problem. Guess what? It’s not just Hadoop.

Infrastructure and data integration is the biggest challenge

Integrating existing data infrastructure with new big data infrastructure, and then adding external data sources to the mix, makes integration a completely new problem. This is not a matter of simply upgrading your ETL tools. With the Infochimps Enterprise Cloud, we help you understand the “new ETL” used by our web-scale friends.

Open source is cheap, but not easily commercialized

Silicon Valley alone has created over 250,000 open source projects. Disruption is obviously occurring within the open source community. However, enterprises are not in a position to deploy these projects properly, even with help from the many commercialization vendors. How does a company integrate several open source solutions into one? With the Infochimps Enterprise Cloud, we support an end-to-end big data service that combines many commercial open source projects to offer real-time stream processing, ad-hoc analytics, and batch analytics as one integrated data service.

Data security + data volume both dictate deployment options

Only non-sensitive, publicly available data sets (e.g. Twitter) are being analyzed on elastic public cloud infrastructure. Compliance and governance requirements still dictate that analytics on sensitive data occur “behind the firewall”. Also, if you are an established enterprise with large volumes of data, you are not going to “upload” it all to the cloud for your analytics. With the Infochimps Enterprise Cloud, we provide public, virtual private, private, or hybrid big data cloud services that address the needs of big businesses with big problems.

Today, I’m pleased to announce the Infochimps Enterprise Cloud, our big data cloud running on a network of big data-focused data centers and being deployed by leading big data system integrators.

These are exciting times, indeed. Read the full press release here.
