Traditional Analytics Approach

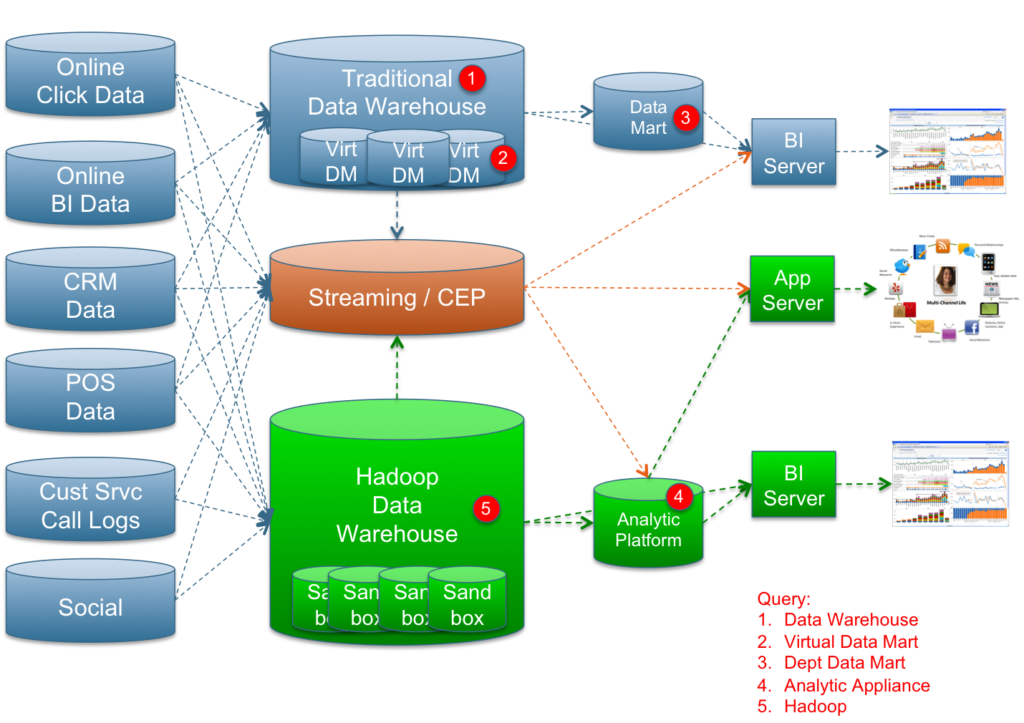

The front-end of the above analytics architecture remains relatively unchanged for casual users, who continue to use reports and dashboards running against dependent data marts (either physical or virtual) fed by a data warehouse.

This environment typically meets many of the organization's information needs, namely those that can be defined up front through requirements-gathering exercises. Predefined reports and dashboards are designed to answer questions tailored to individual roles within the organization.

Ad hoc needs of casual users can also be serviced by the traditional data warehouse and data mart architecture. However, casual users typically rely on the IT department or “super users” (tech-savvy business colleagues) to create ad hoc reports and views on their behalf.

Search-based exploration tools, which let users type queries in plain English and refine the results using facets or categories, are one of several ways to give more business users access to the data without requiring technical sophistication.
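To make the idea of faceted refinement concrete, here is a minimal sketch in Python. It is not how any particular search tool works; the sample records, the field names (`region`, `year`) and the helper functions `search` and `facet_counts` are all hypothetical, chosen only to illustrate the "type a query, then narrow by category" interaction.

```python
from collections import Counter

# Hypothetical sample records; the fields are illustrative assumptions.
RECORDS = [
    {"text": "quarterly sales report for retail stores", "region": "EMEA", "year": 2011},
    {"text": "sales forecast for online channel", "region": "APAC", "year": 2012},
    {"text": "customer churn analysis", "region": "EMEA", "year": 2012},
    {"text": "retail sales by store", "region": "EMEA", "year": 2012},
]


def search(records, query, filters=None):
    """Return records that contain every query term and match all facet filters."""
    terms = query.lower().split()
    filters = filters or {}
    hits = []
    for rec in records:
        words = rec["text"].lower().split()
        matches_terms = all(term in words for term in terms)
        matches_facets = all(rec.get(field) == value for field, value in filters.items())
        if matches_terms and matches_facets:
            hits.append(rec)
    return hits


def facet_counts(hits, field):
    """Count how many hits fall under each value of a facet field."""
    return Counter(rec[field] for rec in hits)


# Step 1: plain keyword search.
hits = search(RECORDS, "sales")
# Step 2: show the user which regions the hits fall into.
regions = facet_counts(hits, "region")
# Step 3: the user clicks a facet value to narrow the results.
refined = search(RECORDS, "sales", {"region": "EMEA"})
```

The facet counts are what the tool would display beside the results, so a business user can narrow the search by clicking a category instead of writing a query with filter conditions.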

One new addition to the casual user environment is dashboards powered by streaming/CEP (complex event processing) engines, which provide real-time reports. While these operational dashboards are primarily used by operational analysts and workers, many executives and managers are keen to keep their fingers on the pulse of their companies’ core processes by accessing these “twinkling” dashboards directly or, more commonly, receiving alerts from these systems.
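As a rough illustration of the kind of rule such an engine might evaluate to raise an alert, the sketch below counts events in a trailing time window and flags the moments when the count exceeds a threshold. It is a simplified stand-in written in plain Python, not the API of any real streaming or CEP product; the function name `sliding_window_alerts`, the event timestamps and the threshold are all assumptions.

```python
from collections import deque


def sliding_window_alerts(timestamps, window_seconds, threshold):
    """Yield (timestamp, count) whenever more than `threshold` events
    fall inside the trailing window of `window_seconds`."""
    window = deque()
    for ts in timestamps:
        window.append(ts)
        # Drop events that are no longer inside the trailing window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > threshold:
            yield ts, len(window)


# Hypothetical timestamps (in seconds) of failed-order events.
failed_orders = [1, 2, 3, 20, 21, 22, 23, 24, 60]
alerts = list(sliding_window_alerts(failed_orders, window_seconds=10, threshold=3))
```

With this made-up data, alerts fire only during the burst of failures between seconds 20 and 24, which is the sort of signal a manager would receive as a notification rather than by watching the dashboard.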

New Analytics Approach

The biggest opportunity in the above analytics architecture is how it serves the information needs of power users. A power user is a person whose job is to crunch data on a daily basis to generate insights and plans. The architecture gives power users many new options for consuming corporate data rather than creating countless “spreadmarts”.

Power users include business analysts (e.g., Excel jockeys), analytical modelers (e.g., SAS programmers and statisticians), and data scientists (e.g., application developers with business process and database expertise). Under the new paradigm, power users query an analytic platform (separate from the enterprise data warehouse), Hadoop directly (the new semi-structured data warehouse), or both.

An analytic platform can be implemented via a number of technology approaches:

- MPP analytic databases (e.g. Greenplum, Aster Data)

- Columnar databases (e.g. ParAccel, Infobright, Sybase IQ, Vertica)

- Analytic appliances (e.g. Netezza, Exadata)

- In-memory databases (e.g. SAP HANA, QlikView)

- Hadoop-based analytics (e.g. Hive, HBase, Mahout, Giraph)

Which approach or combination of approaches are you currently using or going to use?

Do you think that the Hadoop open source ecosystem will evolve to the point where the other analytic platforms become less relevant (e.g., what happens when the community adds real-time, mixed-workload support to Hadoop and develops a comprehensive suite of Hadoop-enabled, parallelized analytic algorithms)?

In an attempt to be controversial, I’m going to predict that Hadoop will expand to provide support for a sophisticated analytics layer which surpasses the performance of all existing analytic platform alternatives.

All these platforms are integrating with Hadoop because Hadoop acts as a great initial data store and ETL pre-processing engine. However, this integration will ultimately lead to their demise as Hadoop’s capabilities begin to overlap with their own.