The Big Data Warehouse – The New Enterprise

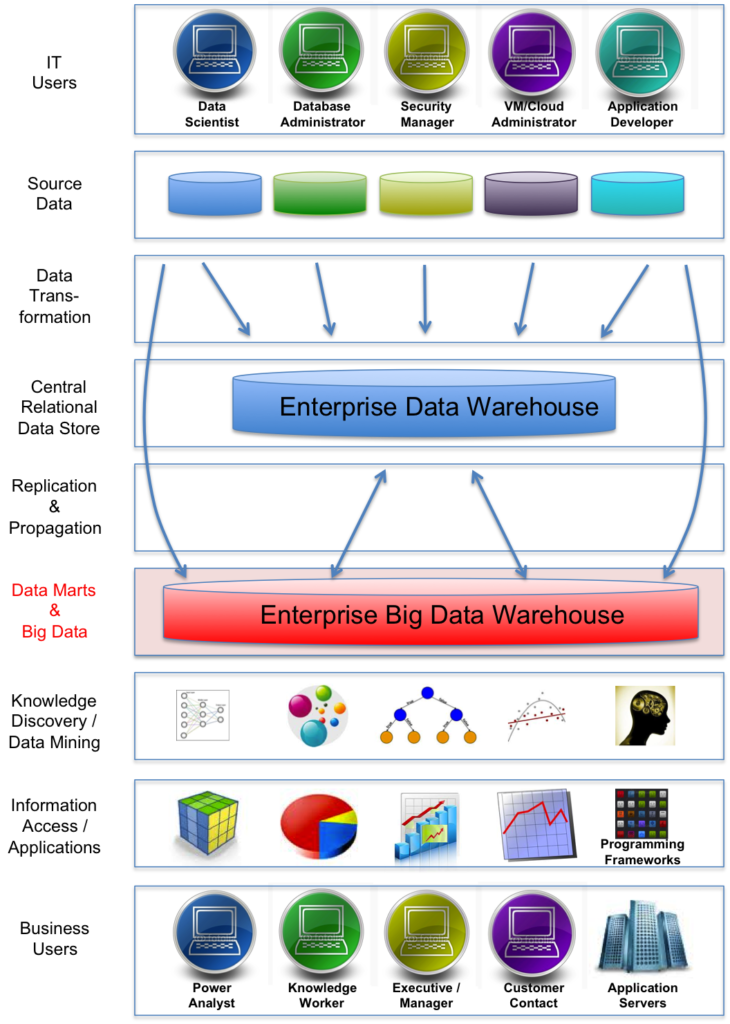

If you are familiar with the Fortune 1000 enterprise data warehouse reference architecture, you’ll appreciate how it is evolving to include Big Data.

We’re seeing a few things repeat themselves, but now with semi-structured data:

- IT needs to address the needs of business users

- There are many new data sources

- Those who can centralize ALL enterprise data will win

- Enterprise groups need sandboxes (aka data marts and now Hadoop data stores)

- Discovery needs to be simplified with user-friendly data mining (now including unstructured data)

- Information access is being made self-serviceable (EDW, Big Data, and BI data marts)

- Business users need time-to-market acceleration through app platforms

Note: The new enterprise warehouse architecture shown above depicts a Big Data store that is much larger than the traditional enterprise data warehouse. This is not hard to understand: RDBMS data stores are, by nature, structured and compact compared to their new counterparts, the unstructured NoSQL data stores.

IT needs to address the needs of business users

The gatekeepers of the enterprise’s key resource, data, are making that resource available to others in the organization (willingly or not). Business users are beginning to benefit from two main trends in the IT industry:

- Use of private and public cloud services to automate access

- Use of the Hadoop framework to create new and timely data sandboxes

By making IT infrastructure more transparent, and empowering others to gain access through self-service provisioning of those resources, the organization becomes more responsive to market needs. IT is either proactively or reactively breaking down the traditional barriers to data access. Big Data is a great example: teams can quickly spin up proofs of concept and advance their thinking without the burden of data schemas, expensive tools, and the like.

There are many new data sources

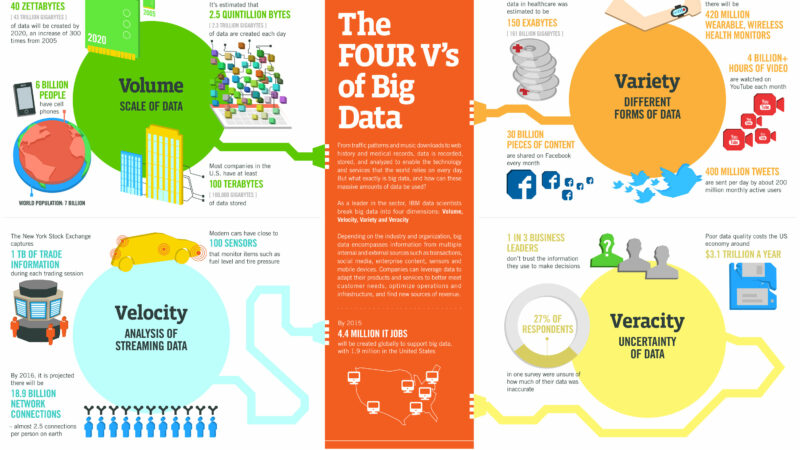

Much of the organization’s data had to be “thrown on the floor” due to the expense of traditional data warehouse infrastructure. Now, with web-scale technologies like Hadoop, enterprises can throw that data into unstructured “data crock pots” and “cook up new insights” for the company. With access to Apache web logs, social feeds, M2M data, etc., it’s no surprise that IDC predicts the digital universe will reach 35 zettabytes by 2020, with 90% of it “unstructured”…lots of new data sources.
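To make the “crock pot” idea concrete, here is a minimal Python sketch of schema-on-read: raw Apache log lines are kept exactly as they arrived, and structure is imposed only when the data is read. The regular expression is a simplified, assumed reading of the Apache common log format, and the function names (`parse_line`, `read_with_schema`) are hypothetical. In a real Hadoop deployment this logic would more likely live in a mapper or a query-engine table definition than in a standalone script.

```python
import re
from typing import Iterator, Optional

# Simplified, assumed pattern for the Apache "common log format":
# host ident user [time] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line: str) -> Optional[dict]:
    """Apply the reading schema to one raw line; return None if it does not fit."""
    match = CLF_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    # Apache writes "-" when no response body was sent.
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


def read_with_schema(raw_lines: list[str]) -> Iterator[dict]:
    """Schema-on-read: raw lines are stored untouched; structure is imposed only here."""
    for line in raw_lines:
        record = parse_line(line)
        if record is not None:
            yield record


# Hypothetical raw data, including a line that does not match the schema.
raw = [
    '10.0.0.1 - - [10/Oct/2010:13:55:36 -0700] "GET /index.html HTTP/1.0" 200 2326',
    'not a log line at all',
    '10.0.0.2 - - [10/Oct/2010:13:56:01 -0700] "GET /missing HTTP/1.0" 404 -',
]
records = list(read_with_schema(raw))
```

Malformed lines are skipped at read time rather than rejected at load time. That is the trade-off schema-on-read makes: loading is cheap, and the cost of interpreting the data moves to whoever reads it.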

Those who centralize data will win

This doesn’t mean that you can’t have data marts. However, I believe the data mart will now begin to evolve into many separate “buckets” of sandboxed MapReduce data sets.

Envision a single Hadoop cluster with exabytes of corporate data, responding to thousands of MapReduce jobs every day (initiated by data scientists as well as knowledge workers and power analysts across the organization). Whether the subsets of data are stored in a single Hadoop file system or transferred between separate Hadoop clusters is not important. What is important is that EVERYONE will have access to any and all data, without first having to set up queries and schemas to do so. And there will be a tight connection between the traditional relational data store (EDW) and the Big Data store. Together, they form the new “single version of the truth”.
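For readers who have not written one, a MapReduce job boils down to three steps: map each input record to key/value pairs, shuffle those pairs so all values for a key land together, and reduce each group. The sketch below simulates that model in a single Python process using word counting, the canonical example. It is not Hadoop’s API, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `run_job`) are illustrative. On a real cluster the framework distributes mappers and reducers across nodes and performs the shuffle over the network.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Single-process simulation of the MapReduce programming model.
# Function names are illustrative only; this is not Hadoop's Java API.


def map_phase(document: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group intermediate values by key, as the framework does between phases."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values emitted for one key."""
    return key, sum(values)


def run_job(documents: list[str]) -> dict[str, int]:
    """Run map -> shuffle -> reduce over a list of input records."""
    intermediate = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = run_job(["big data is the sandbox", "the data is the asset"])
```

Because the mapper never needs a predefined schema, the same job shape works over log lines, social posts, or any other text the cluster happens to hold.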

Groups need sandboxes

Hadoop grew up at Yahoo! because the company needed a place where its data scientists and app-dev teams could get access to enterprise data quickly to experiment and discover. Big Data will provide the same value proposition to the new “data-driven” enterprise. It’s time to take the gloves off, roll up your sleeves, and get your hands dirty…let the organization have access to the data. Big Data provides that promise. Big Data IS the sandbox.

Discovery needs to be simplified

After starting Teradata’s internal data mining program back in 1996 (almost 15 years ago), I’m still seeing new data mining offerings make similar value propositions: instant access to organizational data and simple, business-user-centric interfaces designed to remove the inherent complexities of the data mining process. And let’s not forget that moving the data mining process closer to the data (e.g., leveraging in-database analytics) has been the promise of data mining tools for two decades.
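The “move the mining closer to the data” idea can be shown with even a trivial aggregate. This minimal sketch uses Python’s built-in `sqlite3` module and an in-memory table as a stand-in for a warehouse; the `sales` table and its rows are made up for illustration. It computes the same per-region totals twice: once by pulling every row into the client, and once by letting the database run the `GROUP BY`. Real in-database analytics (for example, scoring a model inside the warehouse) is far more involved, but the principle is the same: ship the computation, not the data.

```python
import sqlite3

# Hypothetical sales table in an in-memory SQLite database,
# standing in for a data warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("east", 120.0), ("east", 80.0), ("west", 200.0), ("west", 50.0), ("west", 10.0)],
)

# Approach 1: pull every row to the client and aggregate there
# (the data moves to the code).
totals_client: dict[str, float] = {}
for region, amount in conn.execute("SELECT region, amount FROM sales"):
    totals_client[region] = totals_client.get(region, 0.0) + amount

# Approach 2: push the aggregation into the database (the code moves to the data);
# only one summary row per region is returned to the client.
totals_in_db = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region")
)

conn.close()
```

With five rows the difference is invisible, but with billions of rows the second approach avoids moving the raw data out of the store at all.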

Big Data provides a new platform for knowledge discovery and data mining by simplifying the access to the data. What is still missing is the ability to raise this up to the business user.

My opinion is that discovery, by definition, cannot be distilled into a simple workflow that can be automated. Sure, you can provide things like visual programming to allow managers to build, train, and deploy their own neural nets (really?)…but the new data scientists and the new application developers will still want to get their hands dirty. And, let’s be honest…business users do not want to execute the data mining process…and the data miners do not want to play with fluffy, icon-driven user interfaces.

However, I do believe there IS an opportunity to develop a new Big Data data mining process, facilitated through a suite of knowledge discovery web services that simplify rapid BI application development: a new Big Data Private PaaS. [Note: What do you expect from me, the PaaS guy? But don’t take my word for it…talk to a hundred Fortune 1000 companies and their internal IT, application development, and business user organizations…maybe you’ll hear something different.]

Information access is being made self-serviceable

Go figure. I can still remember the “Data Warehouse Readiness Services”, “Data Warehouse Design Services”, and “Data Warehouse Support and Enhancement Services”. But the one that still flashes the most in my mind is the “Data Warehouse Information Discovery Services” where you determine how to link business strategies with information technology….and, of course, this involves building a customized data warehouse or data mart solution.

Well, you may not throw away the architecture, logical data modeling, and the many similar tasks involved in building traditional data infrastructure. But you sure can accelerate the process, providing more immediate access to data. Just ask the many emerging “data scientists” in the organization who are spinning up Big Data Hadoop clusters and playing…discovering….in a matter of days and weeks, versus months and sometimes years.

Don’t believe me? Just ask folks at Facebook, LinkedIn, Twitter, Yahoo!, eBay, etc. See how long it takes them to answer a new business question that requires access to existing or new data elements.

Time-to-market acceleration through app platforms

If you could provide a suite of BI application development services to accelerate time-to-market for new discoveries, new BI applications, new executive insights, what would they be?

Do you think it’s more important to provide access to infrastructure, or tools to facilitate the development of new BI applications for the organization?

Sure, the new enterprise should have both…but those who are more advanced in their thinking are already focused on how to enable application development, providing easy-to-use tools for access to infrastructure (and self-service capable infrastructure). I think the true value lies with the application developer: it always has, and it always will.

This could start out as a number of fixed Hadoop clusters. It could consist of Hadoop cluster stacks which can be provisioned via your Private IaaS offering. It could be a private PaaS of Hadoop-enabled web services. It could be a hybrid Hadoop Cloud.

What do you think?
